
Environment

All three machines have the same configuration:

| OS | CPU | Memory | Disk |
|---|---|---|---|
| CentOS 7 | 2 cores | 2 GB | 20 GB |

- Deployed with kubeadm

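Run on all nodes:

```sh
# 1. Disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# 2. Disable SELinux (setenforce for now, the config file for after a reboot)
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# 3. Disable swap (temporary: swap comes back after a reboot unless removed from /etc/fstab)
swapoff -a

# 4. Load br_netfilter (the bridge-nf-call keys need it) and set the bridge/forwarding parameters
modprobe br_netfilter
echo br_netfilter > /etc/modules-load.d/k8s.conf   # load the module again on boot
cat << EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
sysctl --system   # apply the settings now

# 5. Set the hostname (run only the matching line on each machine)
hostnamectl set-hostname master   # master node
hostnamectl set-hostname node01   # node01
hostnamectl set-hostname node02   # node02

# 6. Add host name entries (run on all three machines)
cat >> /etc/hosts << EOF
192.168.44.136 master
192.168.44.141 node01
192.168.44.142 node02
EOF
```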
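`swapoff -a` only lasts until the next reboot, and by default the kubelet refuses to start while swap is on. A minimal sketch for making it permanent by commenting out swap entries in /etc/fstab; the helper name `disable_swap_in_fstab` is hypothetical, and it assumes GNU sed (the CentOS 7 default) and a standard fstab where swap entries contain a whitespace-separated `swap` field.

```sh
# Hypothetical helper: comment out every active swap entry in an fstab-style file
# so swap stays off after a reboot. Assumes GNU sed.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Keep a backup next to the original before editing in place
  cp "$fstab" "$fstab.bak"
  # Prefix "#" to uncommented lines that contain a standalone "swap" field
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage (as root, on every node):
#   swapoff -a
#   disable_swap_in_fstab /etc/fstab
```

Already-commented lines are skipped, so running the helper twice does not add a second `#`.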

Deploy Docker

| Docker version | Mirror |
|---|---|
| 26.1.3 | Alibaba Cloud |

- Install a different Docker version if you need one.

Install on all nodes:

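```sh
# 1. Install dependencies
yum install -y yum-utils device-mapper-persistent-data lvm2

# 2. Add the Alibaba Cloud docker-ce yum repository
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 3. Install Docker (installs the newest version in the repo; pin one with e.g. docker-ce-26.1.3)
yum -y install docker-ce

# 4. Create the /etc/docker/ directory
mkdir -p /etc/docker/
# Registry mirror: replace the address with the one you obtained from Alibaba Cloud Container Registry.
# Set the cgroup driver to systemd so Docker uses the same driver as the kubelet. This step matters.
tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://40d9jgnm.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

# 5. Reload systemd and restart Docker
systemctl daemon-reload
systemctl restart docker
systemctl enable docker

# Check the result
docker info
```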
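To confirm the daemon.json change took effect, compare the driver that Docker reports with `systemd`. A minimal sketch; `check_cgroup_driver` is a hypothetical helper, and `docker info --format '{{.CgroupDriver}}'` prints just the cgroup driver field instead of the full `docker info` output.

```sh
# Hypothetical helper: succeed only if the given cgroup driver matches the expected one
# (default "systemd", which is what daemon.json above configures).
check_cgroup_driver() {
  local actual="$1" expected="${2:-systemd}"
  if [ "$actual" = "$expected" ]; then
    echo "OK: cgroup driver is $actual"
  else
    echo "Mismatch: cgroup driver is '$actual', expected '$expected'" >&2
    return 1
  fi
}

# Usage on each node after restarting Docker:
#   check_cgroup_driver "$(docker info --format '{{.CgroupDriver}}')"
```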

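Deploy Kubernetes

| Kubernetes version | Mirror |
|---|---|
| 1.27.6 | Alibaba Cloud |

- Install a different Kubernetes version if you need one.

Configure the yum repository

- Run on all nodes: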

```sh
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```
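Both version tables above say you can pick another version. To see which versions a repository actually offers, `yum --showduplicates list <package>` prints one row per available build. The sketch below (hypothetical helper `list_pkg_versions`, assuming yum's usual `name.arch  version  repo` columns on one line and GNU `sort -V`) reduces that output to the distinct versions.

```sh
# Hypothetical helper: read `yum --showduplicates list PKG` output on stdin
# and print the distinct available versions of PKG in version order.
list_pkg_versions() {
  local pkg="$1"
  # Keep rows whose first column starts with "PKG." (PKG.<arch>) and print the version column
  awk -v p="$pkg" 'index($1, p ".") == 1 { print $2 }' | sort -u -V
}

# Usage:
#   yum --showduplicates list kubeadm | list_pkg_versions kubeadm
#   yum --showduplicates list docker-ce | list_pkg_versions docker-ce
```

The version string printed for a package is what goes after the hyphen in an install command such as `kubeadm-1.27.6`.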

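Install the bootstrap components

- kubelet: runs on every node in the cluster; starts Pods and manages their containers.
- kubeadm: the tool for quickly bootstrapping Kubernetes; used to initialize the cluster.
- kubectl: the Kubernetes command-line tool, used to deploy and manage applications and maintain components.

```sh
# Master node: install all three components
yum install -y --nogpgcheck kubelet-1.27.6 kubeadm-1.27.6 kubectl-1.27.6

# Worker nodes: install kubelet and kubeadm
yum install -y --nogpgcheck kubelet-1.27.6 kubeadm-1.27.6
```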

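Start kubelet

Run on all nodes:

```sh
systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
```

Until `kubeadm init` or `kubeadm join` has run on a node, kubelet has no configuration and keeps restarting; this is expected.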

Generate the init configuration file

- The images that init-config pulls are mainly kube-apiserver, etcd, kube-scheduler, kube-controller-manager, CoreDNS, and so on.

Run on the master node:

```sh
kubeadm config print init-defaults > init-config.yaml
```

Edit the init-config.yaml you just generated. There are three changes, marked below. `criSocket` must also point at containerd's socket, because dockershim was removed in Kubernetes 1.24:

```yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 1.2.3.4  # change 1: this machine's IP address
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock  # containerd socket; dockershim no longer exists
  imagePullPolicy: IfNotPresent
  name: master  # change 2: hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers  # change 3: Alibaba Cloud mirror
kind: ClusterConfiguration
kubernetesVersion: 1.27.6
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}
```
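The edits to init-config.yaml can also be made without an editor. A minimal sketch, assuming GNU sed and the key layout shown above; `patch_init_config` is a hypothetical helper. It rewrites every line whose key is exactly `name:`, so it relies on `nodeRegistration.name` being the only such key, as in the file above (`clusterName:` is not touched). Check `kubernetesVersion` by hand.

```sh
# Hypothetical helper: apply the init-config.yaml edits non-interactively.
#   $1 config file, $2 master IP, $3 node name, $4 image repository (optional)
patch_init_config() {
  local cfg="$1" ip="$2" node="$3"
  local repo="${4:-registry.aliyuncs.com/google_containers}"
  sed -i \
    -e "s#^\([[:space:]]*advertiseAddress: \).*#\1${ip}#" \
    -e "s#^\([[:space:]]*name: \).*#\1${node}#" \
    -e "s#^\([[:space:]]*imageRepository: \).*#\1${repo}#" \
    -e "s#^\([[:space:]]*criSocket: \).*#\1unix:///var/run/containerd/containerd.sock#" \
    "$cfg"
}

# Usage on the master node:
#   kubeadm config print init-defaults > init-config.yaml
#   patch_init_config init-config.yaml 192.168.44.136 master
```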

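Configure containerd

- As with the Docker registry mirror configured earlier, containerd in mainland China also needs to pull from a domestic mirror.

Run on all nodes:

```sh
# Replace the config shipped with containerd.io (which disables the CRI plugin) with the full default config
containerd config default > /etc/containerd/config.toml
grep -n "sandbox_image" /etc/containerd/config.toml

# Edit the file: point sandbox_image at the Alibaba Cloud pause image (kubeadm 1.27 expects pause:3.9),
# and set SystemdCgroup = true so containerd uses the same systemd cgroup driver as the kubelet
vim /etc/containerd/config.toml
#   sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"
#   SystemdCgroup = true

# Restart containerd
systemctl daemon-reload
systemctl restart containerd
```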
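The config.toml edits can be scripted instead of made in vim. A minimal sketch, assuming GNU sed and the `sandbox_image` and `SystemdCgroup` keys that `containerd config default` prints for containerd 1.6/1.7; `patch_containerd_config` is a hypothetical helper.

```sh
# Hypothetical helper: point sandbox_image at the Alibaba Cloud pause image and
# switch runc to the systemd cgroup driver in a containerd config.toml.
patch_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}"
  local pause="${2:-registry.aliyuncs.com/google_containers/pause:3.9}"
  sed -i \
    -e "s#^\([[:space:]]*sandbox_image = \).*#\1\"${pause}\"#" \
    -e 's#^\([[:space:]]*SystemdCgroup = \)false#\1true#' \
    "$cfg"
}

# Usage on every node:
#   containerd config default > /etc/containerd/config.toml
#   patch_containerd_config /etc/containerd/config.toml
#   systemctl restart containerd
```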

拉取k8s相关镜像

shkubeadm config images pull --config=init-config.yaml

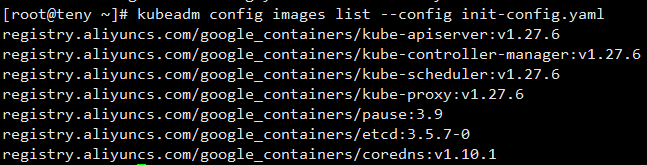

如果拉取失败,可以通过命令列出需要的镜像后逐个拉取

shkubeadm config images list --config init-config.yaml
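Pulling the listed images one at a time can be looped. A minimal sketch, assuming the images are pulled into containerd with `crictl` (installed with kubeadm as the cri-tools package) pointed at containerd's socket; `pull_each` and the `PULL_CMD` override are hypothetical.

```sh
# Hypothetical helper: read image names (one per line) on stdin and pull each one,
# reporting failures instead of stopping at the first error.
# PULL_CMD is a hypothetical override; by default crictl pulls into containerd.
pull_each() {
  local img failed=0
  local cmd="${PULL_CMD:-crictl --runtime-endpoint unix:///var/run/containerd/containerd.sock pull}"
  while read -r img; do
    [ -z "$img" ] && continue
    echo "Pulling $img"
    # $cmd is intentionally unquoted so it splits into the command and its arguments;
    # stdin is redirected so the pull command cannot consume the image list
    if ! $cmd "$img" </dev/null; then
      echo "Failed: $img" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Usage on the master node:
#   kubeadm config images list --config init-config.yaml | pull_each
```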

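Initialize the master node

- Replace 192.168.44.136 with your own master node's IP address.
- `--pod-network-cidr=10.244.0.0/16` matches the default `Network` in the Flannel manifest used below.

Run on the master node:

```sh
kubeadm init \
  --apiserver-advertise-address=192.168.44.136 \
  --apiserver-bind-port=6443 \
  --pod-network-cidr=10.244.0.0/16 \
  --service-cidr=10.96.0.0/12 \
  --kubernetes-version=1.27.6 \
  --image-repository registry.aliyuncs.com/google_containers \
  --v=6
```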

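If the installation fails and aborts

```sh
# Reset the node
kubeadm reset
# kubeadm reset leaves the CNI configuration and kubeconfig files in place; remove them too
rm -rf /etc/cni/net.d $HOME/.kube/config
# After fixing the cause, run kubeadm init again (--v=6 shows more detailed errors)
```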

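Master initialization succeeded

When initialization finishes, kubeadm prints three commands to run.

Run on the master node:

```sh
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

- Further down, the output also contains the command for joining worker nodes to the cluster.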

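Initialize the worker nodes

- Copy the join command from the master's init output (your token and hash will differ from the ones below).

Run on every worker node:

```sh
# Paste it as a single line without the "\" line-break markers, otherwise it fails
kubeadm join 192.168.44.136:6443 --token w34ha2.66if2c8nwmeat9o7 --discovery-token-ca-cert-hash sha256:20e2227554f8883811c01edd850f0cf2f396589d32b57b9984de3353a7389477
```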
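kubeadm prints the join command split across lines with a trailing `\`, which is what the one-line warning above is about. If you saved the init output (for example with `kubeadm init ... | tee kubeadm-init.log`; the file name is an assumption), the sketch below joins the continuation lines into one. `extract_join_cmd` is a hypothetical helper. If the token has expired (the default TTL is 24 hours), `kubeadm token create --print-join-command` on the master prints a fresh single-line join command instead.

```sh
# Hypothetical helper: print the first "kubeadm join" command from a saved
# kubeadm init log, with its backslash continuation lines merged into one line.
extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join/ { grab = 1; cmd = "" }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)                     # drop indentation
      cont = sub(/[ \t]*\\[ \t]*$/, "", line)      # strip a trailing backslash, remember if there was one
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; exit }
    }
  ' "$1"
}

# Usage:
#   extract_join_cmd kubeadm-init.log
```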

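Deploy the network plugin

- Flannel is a network fabric designed for Kubernetes by the CoreOS team. Put simply, it gives the containers created on different nodes of the cluster virtual IP addresses that are unique across the whole cluster.

Run on the master node:

```sh
# Download (the project has since moved to github.com/flannel-io/flannel; if this URL stops working, get kube-flannel.yml there)
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# Apply
kubectl apply -f kube-flannel.yml
```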
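The `Network` value in kube-flannel.yml must equal the `--pod-network-cidr` passed to `kubeadm init` (10.244.0.0/16 in this guide); if you change one, change the other. A quick check before applying, sketched as the hypothetical helper `check_flannel_cidr`, assuming the manifest keeps `"Network": "..."` on a single line as in the manifest below.

```sh
# Hypothetical helper: compare the "Network" value in a flannel manifest
# with the pod CIDR that was passed to kubeadm init.
check_flannel_cidr() {
  local manifest="$1" expected="$2" actual
  # Take the first "Network": "<cidr>" value in the file
  actual=$(sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$manifest" | head -n 1)
  if [ "$actual" = "$expected" ]; then
    echo "OK: flannel Network matches pod CIDR $expected"
  else
    echo "Mismatch: manifest has '$actual', kubeadm init used '$expected'" >&2
    return 1
  fi
}

# Usage on the master node, before kubectl apply:
#   check_flannel_cidr kube-flannel.yml 10.244.0.0/16
```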

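You should now see three new Pods, one kube-flannel Pod per node:

```sh
kubectl get po -A
```

If the file cannot be downloaded, create kube-flannel.yml by hand and paste in the following: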

```yaml
---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.20.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.20.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
```
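Once the Flannel Pods are running, every node should turn Ready. Besides `kubectl wait --for=condition=Ready node --all --timeout=300s`, the hypothetical helper below filters `kubectl get nodes --no-headers` output (columns NAME, STATUS, ROLES, AGE, VERSION) down to the nodes that are not Ready yet. It treats any STATUS starting with `Ready` (for example `Ready,SchedulingDisabled`) as ready.

```sh
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS column does not start with "Ready".
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Usage on the master node:
#   kubectl get nodes --no-headers | not_ready_nodes
# An empty result means all nodes are Ready.
```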

Author: 春天y

Permalink:

Copyright: unless otherwise stated, all articles on this blog are licensed under BY-NC-SA. Please credit the source when reposting.
